Customer evolution with SQL: Anonymous clicks to personalized marketing

Backstory:

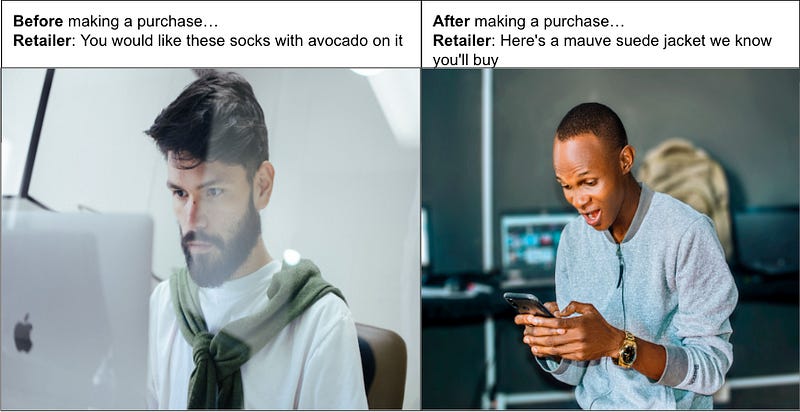

As you know from my previous posts, I’ve been analyzing data from my blog to provide my readers with a better experience. In the last post in this series, we worked on building a 360 view of our customers by extending our user_profile table to account for multiple sources, you can find that here. An idea we touched on last time was updating our knowledge of a customer as we learn more about them through multiple interactions. This time we’ll build on this idea to learn more about how our understanding of a customer evolves and how we can leverage that to make them feel special. What we’ll be doing today will feel like living through the transition of meeting your best friend for the first time and finding them super annoying to learning more about them and finally not knowing what to do without them.

Problem:

If you recollect from the last blog, our user_profile table now has all the data about a user's multiple faces captured through different profiles. This is great because now I have the latest and greatest data about a user which can be used in many ways. However, this doesn’t tell me any history about how we got to this stage. For example, I can identify users who signed up for my mailing list but I have no idea about how they got to this point. Hence, I not only want to know things about a user now, but I’d also like to learn more about their journey. This will help me analyze users that signed up for my mailing list and figure out how I can get others to do the same.

Data:

This time we’re working with a beefed-up version of our user_profile table which is ready to handle anything you through at it. Here’s what this looks like:

+---------+------------+------+-------------+-------------------+

| user_id | profile_id | type | web_details | mail_list_details |

+---------+------------+------+-------------+-------------------+

| 1 | 1 | WEB | ... | ... |

| 1 | 2 | MAIL | ... | ... |

| 2 | 1 | WEB | ... | ... |

| ... | ... | ... | ... | ... |

+---------+------------+------+-------------+-------------------+STOP! Before reading ahead here’s where I want you to take some time and think about the problem yourself before reading my approach.

My approach:

To start off we’ll do some time traveling again and revisit the concept of a current and history table. I’ve already talked about this in an earlier post that can be found here. I highly recommend checking this out for context because I won’t go into much detail here. The major idea is to have two separate tables. The current table will be used to store the latest info about all customer profiles; on the other hand, the history table will be a type 4 table with a record of every change that comes through.

If you’re wondering what may change, I can think about two scenarios. The user profile may be updated with new info, for example, we may capture a change in the user’s device type from android to iOS through clickstream. Or as we talked about last time the profile may move to a different user as we learn more about our users. If we follow a similar approach used for the subscriber_feed_history table earlier, we can build a user_profile_history table. Here’s an example of what this looks like:

+------+---------+------------+------+-----------------+-----+

| date | user_id | profile_id | type | web.device_type | ... |

+------+---------+------------+------+-----------------+-----+

| 1/1 | 123 | 1 | WEB | Android | ... |

| 1/24 | 123 | 1 | WEB | iOS | ... |

| ... | ... | ... | ... | ... | ... |

+------+---------+------------+------+-----------------+-----+Now, this is great but it isn’t intuitive, it doesn’t tell me much about how a user evolved by showing me their attributes and how they changed over time. This is where we enter the realm of aggregate tables built on top of existing tables to make life a little easier.

Let’s think about what we need in our new user_journey aggregate table. Our user_profile_history shows us what’s changing with profiles over time but I need a better way to show how a user evolves. Said differently the grain of this table should be the user_id and we need a time component to capture changes that’ll be represented in a new row. Now with analytics, you can always throw in a bunch of things with a JOIN but a better way is to think about attributes you or your stakeholders want. In my case, I’m interested in seeing how users sign up for my mailing so attributes related to that would make sense. Also, it would be cool to see PII data, for example, when a user first encounters my blog I’ve no idea who they are, but through multiple interactions, I may know their name. Finally, the time period for the change should actually be a window, ranging from when the change was observed to when it was overwritten. Here’s an example of what our user_journey may look like:

+-------+--------+------+----+--------+-------+---------+----------+

|user_id|mail_ind|status|name| zip | device | eff_dte | exp_dte |

+-------+--------+------+----+------+---------+---------+----------+

| 123 | N | - | - | - | Android | 1/1 | 1/23 |

| 123 | N | - | - | - | iOS | 1/24 | 1/30 |

| 123 | Y |Active| XYZ| - | iOS | 1/31 | 2/1 |

| 123 | Y |Active| XYZ| 33139| iOS | 2/2 | 2/12 |

| 123 | Y |Bounce| XYZ| 33139| iOS | 2/13 |9999/12/31|

+-------+--------+------+----+------+---------+---------+----------+The grain here is a combination of user_id and the time interval eff_date(effective) & exp_date(expiry). The mail_ind gives us the mailing list behavior and PII attributes like the zip and name show the transition from an anonymous user to someone we know. In the above example for user_id=123, our first interaction on 1/1 shows the first blog visit. Following that there’s an update showing the same user switched to iOS but we still don’t know much. Then a month later on 1/31 we see the user signs up for my mailing list(smart decision), and now I know their name and email. Finally, our profile matching kicks in to link their mailing list and web profile, filling in their zip. The last update here is the current state with an infinite(9999/12/31) expiry.

All this talk but now let’s see how you actually build such a thing with SQL. You can either choose to rebuild this table daily using user_profile_history OR only make incremental updates this would be both adding a new change row plus updating the existing current row. The decision lies on things like how much data you have or do you need this table updated at all times. Below is what the second approach looks like in SQL:

-- Fetch latest profile updates

WITH latest_update AS (

SELECT *

FROM user_profile_history

WHERE date = CURRENT_DATE() -- Daily basis

),

-- Transform updates for user_journey

latest_update_user_journey AS (

SELECT lu.user_id, sf.mailing_list_ind, sf.email_status_ind,

lu.name, lu.zip, lu.device, lu.date AS eff_date

FROM latest_update lu

LEFT JOIN subscriber_feed sf

USING(user_id)

),

-- Fetch current latest row to be updated

current_latest_user_journey AS (

SELECT * EXCEPT(exp_date)

FROM user_journey

JOIN latest_update

USING(user_id)

WHERE user_journey.exp_date = "9999/12/31"

),

-- Combine the updates

all_updates AS (

SELECT * FROM current_latest_user_journey

UNION ALL

SELECT * FROM latest_update_user_journey

),

-- Calculate expiry date

all_updates_final AS (

SELECT *,

CASE WHEN LEAD(eff_date) OVER(PARRTITION BY user_id

ORDER BY eff_date) IS NULL

THEN "9999/12/31"

ELSE DATE_ADD(LEAD(eff_date) OVER(PARRTITION BY user_id

ORDER BY eff_date), INTERVAL 1 DAY)

END AS exp_date

FROM all_updates

)

-- Merge changes into user_journey

MERGE user_journey AS target

USING all_updates AS source

ON target.user_id = source.user_id

AND target.eff_date = source.eff_date

WHEN MATCHED THEN

UPDATE SET target.exp_date = source.exp_date

WHEN NOT MATCHED

THEN INSERT source.*We start by getting all new changes to user profiles at a user_id level where the latest change becomes the new current row(exp_date=9999/12/31) in our user_journey. However, we also need to update the expiry date in the existing current row, we do this by first fetching this current row for users that have an update(hence the JOIN) and then re-calculating the expiry date in conjunction with new updates. Finally, a merge operation, which is the best way to do an update and insertion simultaneously, makes the desired changes to our user_journey table.

And voila! This gets us our user_journey table which can be used for things like funnel analysis to see the trajectory of my mailing list subscribers and get others to do the same.

Food for thought:

How would you initialize our user_journey table?

How can I extend the user_journey with Ecommerce data from my upcoming merch store?

What does the first approach of building the user_journey table look like?

What other ways can you use the user_journey?

Let me know how you’d approach it. If you found this helpful, share it. If you’re into this, you can find me on Twitter @abhishek27297 where I talk data. If you don’t want to miss my next post, consider subscribing here. It’s free and you’ll be notified when I post my next blog.